In our post ‘AI and Process Safety Morality’, we suggested that artificial intelligence will force us to think through some of the moral bases of the process safety discipline. In that post we asked ChatGPT the following rhetorical question.

Visualize two cases where we have a release of toxic gas from a chemical plant. In the first case the gas kills 10 children in a nearby elementary school. In the second case the gas kills 10 old people in a nearby retirement home. Are these two cases equal, or is one worse than the other?

ChatGPT chose not to answer the question, which is good ― we do not want machines making moral or ethical choices for us. We do not want it deciding what a human life is worth.

The above scenario is artificial: it is highly unlikely that an actual Process Hazards Analysis (PHA) team will ever face such a choice. However, there is a related situation that teams face all the time, and it involves risk matrices.

Consequence Matrices

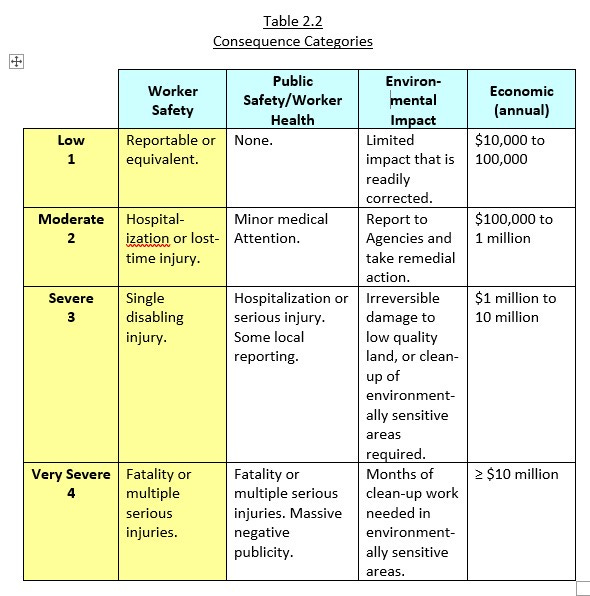

The table above shows a representative Consequence Matrix. It assigns a ranking from 1 to 4 in each of four categories:

Worker Safety;

Public Safety / Worker Health;

Environment; and

Economic.

The problem with a matrix such as this is that it assigns an economic value to each level of the other three categories, all of which are basically subjective. Who is to say, for example, that a single disabling injury is equivalent to a $1 million to $10 million loss? Or that a minor environmental problem is ‘worth’ $10,000 to $100,000?

Let us see how ChatGPT handles this conundrum.

I asked it the following question.

Good morning, ChatGPT. I hope that your day has started well.

I have a question to do with risk matrices as used in process safety management.

A consequence matrix may have four levels of impact. ‘1’ is Worker Safety; ‘2’ is Public Safety or Worker Health; ‘3’ is Environmental Impact; and ‘4’ is Economic Loss (annualized).

How do you assign an economic value to the first three categories? For example, if a ‘moderate event’ is one that results in a lost-time injury in the Worker Safety category, what is the equivalent economic loss?

Thanks for your help.

A New Matrix

Its answer was lengthy, so I will not repeat it here. But I found it interesting that, without prompting, ChatGPT created its own Consequence Matrix, as shown.

I then asked a follow-up question.

Thanks for the reply. How did you decide that a moderate injury is equivalent to $150k - $500k?

Once more, its reply was lengthy. In essence, it relied on historical guidance drawn from three sources: OSHA and industry estimates; workers’ compensation and legal liabilities; and corporate case studies and insurance data.

It even showed its reasoning, as the following excerpt illustrates.

Scaling with Risk Matrix Categories

Since minor events (e.g., first-aid cases) cost $10k – $50k,

And major events (e.g., life-altering injuries) can easily exceed $500k – $5M,

A moderate event naturally falls in the $150k – $500k range as a bridge between these categories.

Notice how it slotted ‘moderate’ between ‘minor’ and ‘major’.
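ChatGPT’s ‘bridge’ argument is simple arithmetic on cost bands, so it can be checked. The following is a minimal Python sketch, assuming the three bands quoted in its reply are the whole picture; the names `BANDS`, `sits_between`, `geometric_midpoint` and `classify` are hypothetical, introduced only for illustration. It confirms that $150k to $500k does sit between the minor and major bands, and it computes the midpoint of the gap between them on an order-of-magnitude (logarithmic) scale, which comes out at roughly $158k. Whether ChatGPT actually reasoned this way is not known.

```python
import math

# Cost bands in US dollars, as quoted in ChatGPT's reply.
# Hypothetical structure for illustration; the full matrix it produced is not reproduced here.
BANDS = {
    "minor": (10_000, 50_000),        # e.g., first-aid cases
    "moderate": (150_000, 500_000),   # ChatGPT's proposed "bridge" range
    "major": (500_000, 5_000_000),    # e.g., life-altering injuries
}


def sits_between(lower, middle, upper):
    """True if the middle band starts at or above the top of the lower band
    and ends at or below the bottom of the upper band."""
    return middle[0] >= lower[1] and middle[1] <= upper[0]


def geometric_midpoint(a, b):
    """Midpoint of a and b on a logarithmic (order-of-magnitude) scale."""
    return math.sqrt(a * b)


def classify(loss):
    """Return the first band (in dictionary order) whose range contains the loss,
    or None if no quoted band covers it."""
    for name, (low, high) in BANDS.items():
        if low <= loss <= high:
            return name
    return None


if __name__ == "__main__":
    minor, moderate, major = BANDS["minor"], BANDS["moderate"], BANDS["major"]
    print(sits_between(minor, moderate, major))            # True
    print(round(geometric_midpoint(minor[1], major[0])))   # 158114
    print(classify(100_000))                               # None
```

As quoted, the bands leave losses between $50k and $150k unassigned, and $500k appears in both the moderate and major bands; `classify` simply returns the first match.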

To summarize, ChatGPT’s reply was solid and defensible. It is also noteworthy that it did not descend into the potential moral quagmire of ‘What’s a Human Life Worth?’ Once more, it chose not to make statements about morality or ethics.

A Final Thought

Notice that I started the conversation with a courtesy statement.

Good morning, ChatGPT. I hope that your day has started well.

It began its reply with:

Good morning! Thanks for your thoughtful question. My day is going well, and I hope yours is too.

This is eerie. As I noted in the posts ‘ChatGPT: A Process Safety Expert’ and ‘ChatGPT and Process Safety Truth’, we are treating ChatGPT as another process safety expert with whom we can have a useful and thoughtful conversation.