Our recent posts on ChatGPT and its use in the process safety discipline have generated some useful discussion about information integrity. A concern is that these programs will provide incorrect guidance because they themselves rely on incorrect or biased information. Such misinformed guidance could lead to serious process safety consequences, with possible follow-up litigation.

Therefore, I asked the program the following question:

Who is legally liable for the information that ChatGPT provides?

It provided the following response:

The legal liability for the information provided by ChatGPT typically rests with the user and the platform implementing ChatGPT, rather than with ChatGPT itself. ChatGPT is a tool created by OpenAI, and users and platform providers are responsible for how they use and implement the information generated by the model.

. . .

Keep in mind that while ChatGPT is designed to provide helpful information, it's not infallible, and users should exercise critical judgment and verify information when needed.

The Reciprocating Compressor

With regard to process safety, let us create a hypothetical situation.

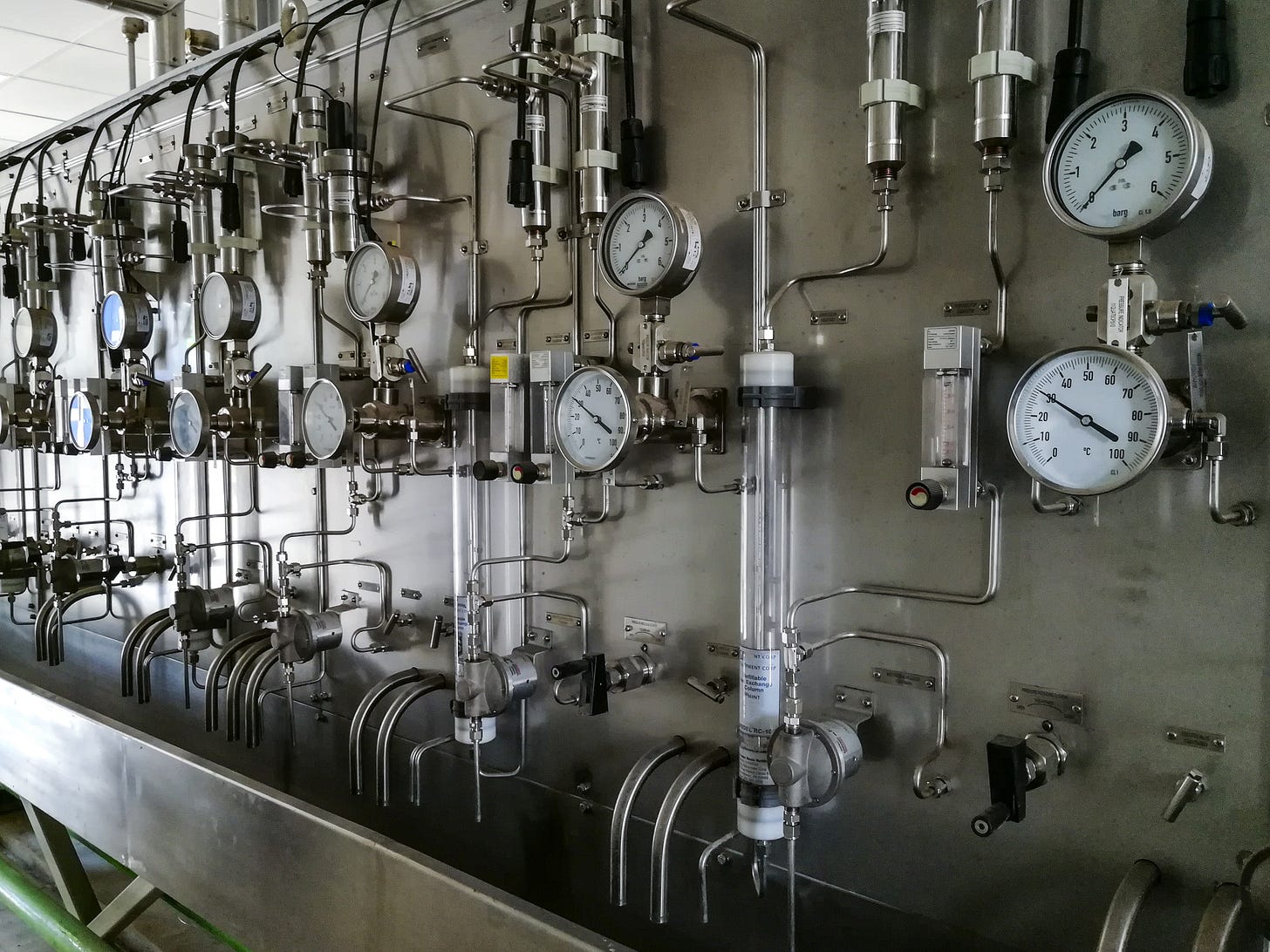

A process facility uses a large, multistage reciprocating compressor. The company decides to install new digital controls on the compressor.

A junior engineer is tasked with writing the start-up procedures for the modified machine. She uses ChatGPT to help with this assignment.

The start-up of the modified compressor goes badly awry due to incorrect instructions in the procedures. The technician who was starting the compressor read a pressure gauge that was specified in the procedures, but it was the wrong gauge. The cost of this error, which includes lost production and repair to the machine, runs into millions of dollars.

In the subsequent investigation it turns out that ChatGPT provided the engineer with incorrect information, but there is no way to verify the integrity of this information. Where did the information come from? Is it reliable? Could it be intentionally misleading? Was information from the compressor manufacturer used?

If the company decides to take legal action, who do they sue, and for what?

It could be argued that the company should have hired a compressor expert to check the start-up procedures. But, if that is the case, why bother using ChatGPT in the first place?

In this context I refer to the actual situation described in the post Bang Bang. An experienced technician used personal knowledge to diagnose a problem. His diagnosis was incorrect, but other human experts provided the correct response. However, if that technician had transferred his incorrect knowledge to an internet database, the incorrect information could be used by ChatGPT or its internal equivalent. The software doesn’t know that the technician’s analysis was wrong.

The ChatGPT disclaimer states that ‘users should verify information’. But that raises the question, ‘How can we verify information when there are no citations, and there is no audit trail?’

Commercial Bias

A follow-on question to the above discussion is, ‘How do we know that the information provided by programs such as ChatGPT is not commercially biased?’

I am currently involved in creating a church website. There are at least half a dozen companies that provide website creation software that includes church templates. When I asked ChatGPT to provide help with this project, it named just one of those companies.

How can we be sure that the system is not receiving biased information that favors one company over another?

Conclusions

In spite of the above concerns, I have found ChatGPT to be extremely helpful in process safety work. It seems to be most useful when handling abstract issues.

Our first post in this series was ChatGPT: A Process Safety Expert. When the program was asked,

What is the most important management element in a process safety management program?

it came back with a thoughtful and credible response.

In other words, the program is good for providing opinions and for handling subjective topics that do not call for right/wrong answers. But when it comes to concrete issues, such as starting a million-dollar compressor, we need to be cautious.

There was an interesting study which showed that one LLM was quite willing to believe feedback telling it that its answer was incorrect, when the answer was actually correct. This is a reason to doubt that user feedback is an effective AI training method.

In preparing a paper for the upcoming CCPS Global Congress, I spent considerable time "exercising" three common large language models (LLMs): ChatGPT (OpenAI), Bard (Google), and Copilot (Microsoft). It is hard not to anthropomorphize these AI programs, e.g., by viewing their output as "opinions."

I asked each LLM: "Should I regard your responses to be your opinions?" Excerpts from their responses included:

ChatGPT: "No, my responses are not opinions; they are generated based on patterns and information in the data I was trained on."

Bard: "No, you shouldn't consider my responses to be my opinions."

Copilot: "Certainly!" But when asked in follow-up "Do you have opinions?" the response was "As an AI language model, I don’t have personal opinions or feelings." BTW, this demonstrates a common concern with LLMs: inconsistency in answers.

LLMs are text generators. As one LLM described it: "[M]y responses are generated based on patterns learned during training. The training process involves exposure to a diverse range of internet text, and the model learns to predict the next word in a sentence." Sometimes those "patterns learned during training" result in the generation of incorrect or misleading text.
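
To make "predict the next word" a little more concrete, here is a deliberately tiny sketch in Python. It is not how ChatGPT or any real LLM is built; it is a simple word-pair (bigram) counter, and the function names and the three training sentences are hypothetical, invented purely for illustration. It only shows the general idea that a text generator repeats whatever pattern was most common in its training text, with no notion of whether that pattern is correct.

```python
from collections import Counter, defaultdict


def train_bigram_model(corpus):
    """Count, for each word, which words follow it in the training text."""
    follower_counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        # Pair every word with the word that comes right after it.
        for current_word, next_word in zip(words, words[1:]):
            follower_counts[current_word][next_word] += 1
    return follower_counts


def predict_next_word(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical training text (an assumption for this sketch): one wording
# appears twice, the other only once. The model has no idea which is right.
training_text = [
    "read the suction gauge",
    "read the suction gauge",
    "read the discharge gauge",
]

model = train_bigram_model(training_text)
print(predict_next_word(model, "the"))  # prints "suction"
```

The toy model answers "suction" only because that wording occurred more often in its training text. If the more frequent wording happens to be the wrong one, the model repeats the error just as readily.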