Meaning of AI

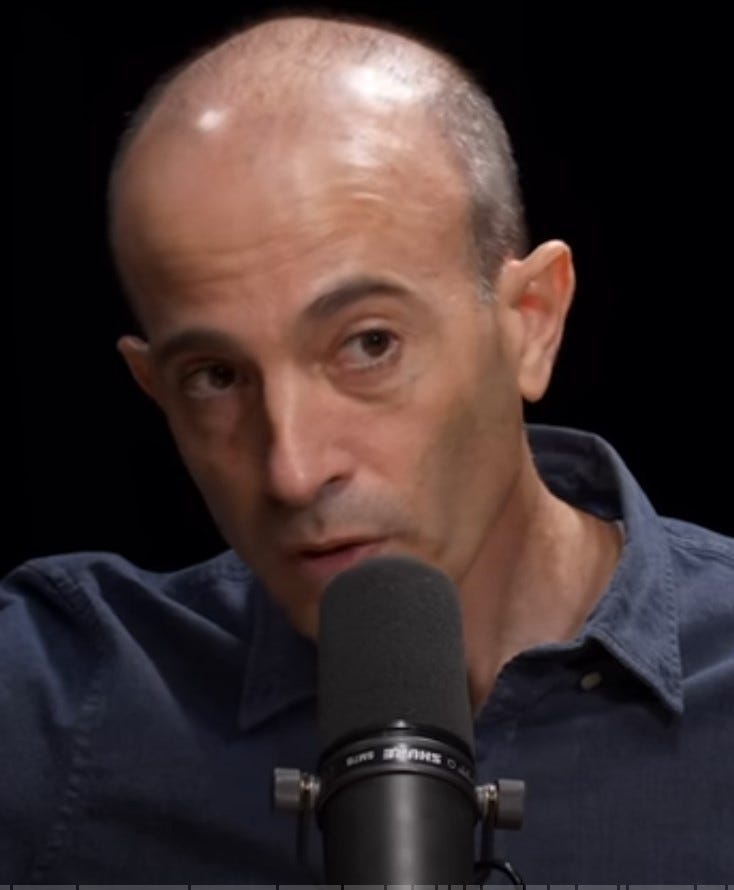

I had an Aha! moment the other day when I listened to a discussion, “Our AI Future Is Way Worse Than You Think”, between Yuval Noah Harari and Richard Roll. Harari stated that the letters ‘AI’ should really mean Alien Intelligence. He did not mean that AI is coming from another civilization outside the solar system. He meant that we humans have evolved organically. This evolution, including the development of our own intelligence, has been slow. AI, on the other hand, is alien because (a) it is not organic, and (b) it is growing extraordinarily quickly, far too quickly for us mere humans to keep up.

AI and Process Safety

For many process safety professionals, their involvement with AI has been mostly with Large Language Models (LLMs) such as ChatGPT. I myself have written almost twenty posts on the topic. At this level we are treating the LLM as a super-charged search engine. We enter a query, and the program provides an answer. The key point is that we are in control.

Harari goes well beyond this stage. He discusses how AI has already taken control of much of our lives without our permission. For example, social media algorithms work without human direction. They feed us posts and pages that they think will appeal to us. Given that anger and outrage generate clicks, the upshot is that the algorithms generate even more anger and outrage, which is one reason we are so divided socially. No human being chose to create this troublesome result. ‘It just happened.’

The ability of AI to take control without our permission could become a source of process safety events.

Here is a theoretical example. A process control system that uses AI may analyze a potentially dangerous condition and decide to shut down the unit for which it is responsible. However, it may not know that such a shutdown could cause more serious problems at other, connected facilities, because it was not trained on what was going on at those facilities. Nevertheless, the AI will make its decisions, act on those decisions, and may not bother to tell the unit operators what it did.

Given concerns such as these, Harari argues that we should slow down the implementation of AI. But, because companies and nations are in competition with one another, their incentives are to move as quickly as possible. As Richard Roll said,

It’s all gas and no brakes.

I find the model “trust, but verify” to be very important in the evaluation of processes by Artificial Intelligence. We had better not become complacent and let the computers handle the information without human intervention. My brother’s business, for example, uses LLMs for customer-facing emails, but with the requirement that one or two humans verify the correctness of the content before it is sent.

There have been cases where someone got a chatbot to sell them a car for $1, and other similar incidents. Those cases, while seemingly fringe, may become the social norm in the near future.